Overview

The word "DevOps" combines two terms, "Development" and "Operations". Many people are unsure whether DevOps is a culture, a movement, an approach, or a blend of all of these. This DevOps Tutorial covers the core DevOps concepts in sequential order. It aims to give you a fair understanding of the DevOps concepts, tools, technologies, and other important approaches associated with it.

Intended Audience: This DevOps Tutorial series is intended to familiarize beginners who aspire to start a career in the IT domain with DevOps concepts.

Prerequisites: There are no strict prerequisites for learning the DevOps concepts, as this DevOps tutorial for beginners guides you right from the basics. Still, a prior understanding of Linux and scripting fundamentals is advisable.

What is DevOps?

Today, most IT companies have adopted DevOps as a way to stay ahead of the competition in the market. In this DevOps Tutorial for Beginners, let us first understand what DevOps is.

The term DevOps blends two words, "Development" and "Operations". It is a practice that lets a single team handle the complete application life cycle, namely development, testing, deployment, and monitoring. Ultimately, DevOps aims to shorten the development life cycle while delivering features, updates, and fixes in line with business goals and objectives.

By adopting the DevOps culture together with its tools and practices, organizations can respond to their customers' requirements more easily and improve application performance significantly, and thus reach their business goals faster. DevOps consists of the following stages:

- Continuous Development

- Continuous Integration

- Continuous Testing

- Continuous Deployment

- Continuous Monitoring

Needs to Learn DevOps

DevOps is a method of software development in which the development and operations teams collaborate at every stage of the software development cycle. Below in this DevOps Tutorial for Beginners, we list the main reasons to learn DevOps:

- It has brought innovative and remarkable changes to the practice of software development. The whole team takes part in the development process and works towards a common goal.

- Continuous integration reduces the manual processes involved in the development and testing stages.

- It increases the chances of working with efficient team members, where knowledge sharing is significantly higher, and it helps build cordial relationships within the team.

- Last but not least, with the never-ending changes in the IT industry, the demand for skilled DevOps professionals is expected to increase considerably.

History of DevOps

Having seen what DevOps is and why it is worth learning, this DevOps Tutorial now looks at the core of DevOps and how it came onto the scene in the industry. Before DevOps was introduced, the software development industry had two main approaches: the Waterfall model and the Agile model of development.

Waterfall Model

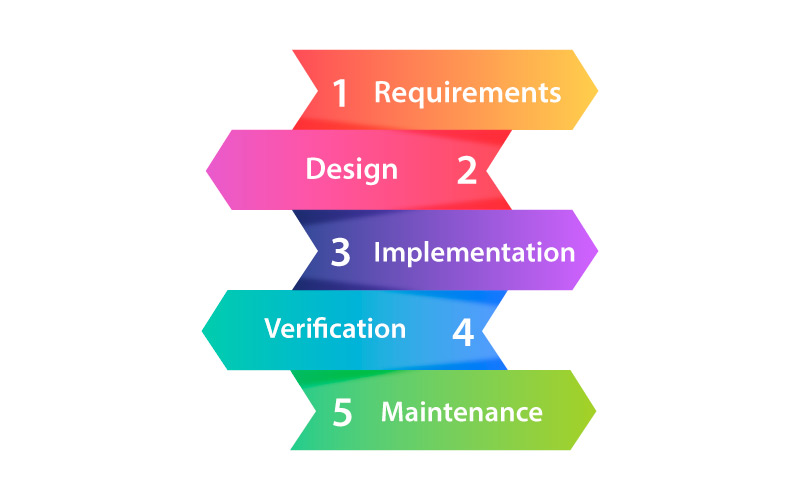

- This is a software development model that is direct and linear. The Waterfall model follows a top-down approach.

- The model has different stages, starting with requirements gathering and analysis. This is the stage where you gather all the requirements from the clients to develop the application.

- The next stage is the Design stage, where you prepare the blueprint for the software. Here, you sketch out how your software is going to look.

- Once the designs are done, they move to the Implementation phase, where coding of the application starts. Here, the team of developers coordinates and works together on different components of the application.

- Once application development is done, you test the developed application in the Verification stage. Different tests are conducted on the application, namely unit testing, integration testing, and performance testing.

- Once all the tests are complete, the application is deployed to the production servers.

- Finally comes the Maintenance stage, in which the application is monitored to ensure its performance. Any issues associated with the performance of the application are dealt with in this phase.

Upsides of the Waterfall Model

- It is simple and easy to understand and use

- It allows for analysis and testing

- It can save time and money

- It is mainly preferable for smaller projects

- It allows departmentalization and managerial control

Downsides of Waterfall Model

- It is highly uncertain and risky

- There is a lack of visibility into current progress

- It is not preferable when the requirements change constantly

- It is hard to make changes to the product once it is in the testing phase

- The end product is available only at the end of the cycle

- It is not recommended for large and complex projects

Agile Methodology

It is an iterative software development approach in which the software project is broken into sprints, or iterations. Each iteration consists of the phases found in the Waterfall model, namely requirements gathering, design, development, testing, and maintenance. Each iteration typically lasts 2-8 weeks.

Process of the Agile Model

- The Agile company launches the application with the high-priority features in its first iteration.

- Once the release is out, the customers or end users provide feedback on the performance of the application.

- The required changes are then made to the application, along with new features, and the application is launched again in the second iteration.

- The complete process is repeated until the desired software quality is reached.

Upsides of the Agile Model

- It responds to needs and changes more adaptively

- Fixing errors at an early phase of development makes the process more cost-efficient

- It enhances the quality of the product and reduces errors

- It permits direct communication among the people involved in the software development project

- It is highly recommended for big and long-term projects

- The Agile model needs only minimum resources and is very easy to handle

Downsides of the Agile Model

- It is highly dependent on customer needs

- It is not easy to predict the effort and time for bigger projects

- It is not preferable for complex projects

- Documentation tends to be less thorough

- It carries maintainability risks

Even as Agile methodology advanced, the Operations and Development teams in organizations remained siloed for many years. Then came DevOps, the next big transformation, which combines collaboration, practices, and tools for releasing better software at a faster pace. The DevOps movement began around 2007-2008. At that time, software development and IT operations professionals felt strongly that there was a serious level of dysfunction in the industry. They also objected to the traditional software development model, in which coders and testers were functionally and organizationally separate.

Furthermore, developers and operations professionals in the past had separate departments, leadership, objectives, goals, and performance measures, and they were assessed on a different basis. Often these professionals worked in different buildings, and results stayed siloed within specific teams. DevOps, by contrast, reaches every stage of both the development and operations lifecycle. From planning and building to monitoring and iterating, DevOps brings together the processes, skills, and tools for every phase of development and operations in the IT organization.

DevOps enables teams to build, test, and deploy at a faster pace with high quality. This is made possible by the tools it offers and by a DevOps culture that blends with the corporate culture and ideology, which helps organizations move forward. The true power of DevOps is reached only when there is good communication and understanding among team members working towards shared goals. The DevOps Training in Chennai at FITA Academy helps students gain a holistic understanding of DevOps concepts and tools under the mentorship of industry professionals.

Applications of DevOps

DevOps is used not only by developers and operators but also by project managers, test engineers, and administrators in different segments. Numerous practices allow an organization to deliver faster and more reliable updates to its customers. The core of DevOps revolves around Agile principles, which significantly influenced the creation of the DevOps concept. Below we list the applications:

Microservices: This is an architectural approach that builds a single application as a suite of small services. Each service communicates with the others through well-defined interfaces, using a lightweight mechanism that is most often an HTTP-based API.

Infrastructure as Code: This is the practice of provisioning and managing infrastructure using code and software development techniques. Developers and system administrators interact with the infrastructure through the API-driven model of the cloud. Rather than setting up and configuring resources manually, with IaC they interact with the infrastructure programmatically.
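The idea of managing infrastructure programmatically can be illustrated with a minimal sketch. It assumes a purely in-memory model: the resource names (`web-server`, `database`, `old-cache`) and the `plan` function are hypothetical and do not belong to any real IaC tool. The sketch compares a declared desired state with the current state and lists the actions needed to reconcile them.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, object]


def plan(desired: Dict[str, Resource],
         current: Dict[str, Resource]) -> List[Tuple[str, str]]:
    """Return the (action, resource name) pairs needed to reach the desired state."""
    actions: List[Tuple[str, str]] = []
    for name, spec in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))   # declared but not yet provisioned
        elif current[name] != spec:
            actions.append(("update", name))   # provisioned with a different spec
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))   # provisioned but no longer declared
    return actions


# Hypothetical desired and current states, for illustration only.
desired = {
    "web-server": {"size": "small", "count": 2},
    "database": {"size": "medium", "count": 1},
}
current = {
    "web-server": {"size": "small", "count": 1},
    "old-cache": {"size": "small", "count": 1},
}

for action, name in plan(desired, current):
    print(action, name)
```

Because the desired state is plain data, it can be stored in version control and reviewed like any other code, which is the main appeal of the approach.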

Monitoring and Logging: Enterprises inspect logs and metrics to find the root causes of problems and to see how application performance affects the end-user experience. Active monitoring is also crucial to ensure that services are available 24/7 without interruption.

Continuous Integration: This refers to merging code changes frequently and testing them repeatedly. Continuous integration aims to identify bugs and defects at an early stage to improve quality. It also significantly reduces the time taken to validate and release software updates.

Collaboration and Communication: One of the prime goals of DevOps is to promote better collaboration and communication. Automation and tooling support this by bringing the development and operations workforce together under one roof. This boosts communication among departments, and teamwork helps any assigned task get done effectively.

DevOps in Networking: DevOps principles are gaining unprecedented popularity in the handling of networking services. With vendor hardware, deployment modes, automation of network functions and devices, and configuration tools, deploying network services has become an easier job for professionals.

DevOps in Data Science: Companies are working hard to become more adaptable, and more organizations are switching to DevOps to deploy code robustly and efficiently. DevOps uses an integrated method to move data science work into production and gives clear direction for robust implementations.

DevOps in Testing: According to a survey report from RightScale, numerous companies have preferred DevOps for testing. They do so to gain agility and speed, as it is essential to automate the complete process of configuration and testing. DevOps as a whole builds on the "Agile Manifesto", and the root of an effective strategy is the "DevOps Trinity", which consists of:

People and Culture: Adopting DevOps helps eliminate the differences among teams so that they work towards a common goal. The main purpose of DevOps is to deliver quality software.

Tools and Technologies: DevOps is a sustainable and adaptable model supported by a range of tools and technologies, which make the complete process of development and operations much easier.

Processes and Practices: DevOps and Agile go together. By adopting Agile, Scrum, or Kanban together with automation, organizations are able to streamline their processes in a repeatable way.

DevOps Architecture

In software engineering, both the Development and the Operations teams play a vital role in application delivery. Generally, the Operations team handles the administrative services, processes, and support of the software. When the Development and Operations teams join together to collaborate, the DevOps architecture comes into play. DevOps aims to bridge the gap between the Development and Operations teams so that software can be delivered rapidly with fewer issues. In this DevOps Tutorial for Beginners, we will dive into the components of the DevOps architecture.

Generally, the DevOps architecture is used for hosting large distributed applications on a cloud platform. DevOps allows the team to address its shortcomings flexibly and improves productivity considerably. Below are the important components of DevOps:

Planning, Identifying, and Tracking: Modern project management tools and practices help the team track ideas and workflows more visually. Stakeholders can then easily get an overview of progress and adjust priorities to achieve better results. With better oversight, project managers can ensure that the team is on the right track and is aware of upcoming pitfalls and obstacles. The teams can also work together to resolve any issues found in the development process.

Continuous Development: In the initial stage, developers plan, build, and commit the code to a version control system such as Git, which holds the source code. After the final release, there may be feedback or suggestions that a developer should incorporate into the application. This continuous process of enhancing the application is termed "Continuous Development".

Continuous Testing: Once the code is uploaded to the source code platform, it undergoes the testing phase. In this phase, the code is tested every time, and the necessary changes are made before it is pushed to production.

Continuous Integration: When one stage of the DevOps lifecycle is complete, the application code should move immediately to the next stage. This is done with the aid of an integration tool. The development practice of continuously moving and merging the code from one stage to the next with the support of tools is called Continuous Integration.

Continuous Deployment: Each feature added to the application may need a few modifications to the application environment. This is called Configuration Management, and it is achieved using deployment tools. The process of continuously changing the application environment based on the most recently added features is termed Continuous Deployment.

Continuous Monitoring: Even after all the planning and testing, bugs may find their way into production. We can keep track of those bugs and other inappropriate system behavior. We can also keep track of feature requests and use monitoring tools continuously to check when and how the application goes through updates.

Continuous Delivery: Last but not least, the DevOps architecture is built on the motto of Continuous Delivery. This means that any practice put into play should foster collaboration and communication among the teams and should work towards the constant, routine delivery of tested software. It can be automated just like Continuous Deployment, as mentioned above.

Advantages of DevOps Architecture

A properly implemented DevOps approach brings many benefits, including the following:

Cost Reduction: One of the primary concerns for any business is operational cost. DevOps helps organizations keep their costs lower. DevOps practices boost efficiency, and more efficient software production improves business performance and lowers the overall cost of production.

Improved Productivity and Release Time: With streamlined processes and shorter development cycles, teams become more productive and software is deployed more quickly.

Efficient and Time-Saving: DevOps simplifies a lifecycle that had grown more complex with earlier iterations. With DevOps, organizations can gather requirements with ease. The process of collecting requirements is streamlined, and a culture of collaboration, accountability, and transparency helps the team meet those requirements smoothly.

Customer Satisfaction: User experience and user feedback are among the most important aspects of the DevOps culture. Collecting details from clients and acting on them helps ensure that the clients' requirements and needs are fulfilled completely.

Principles and Workflow of DevOps

DevOps was originally described as a mindset and a culture that upholds a strong collaborative bond between the infrastructure operations and software development teams. This culture is fundamentally built on the principles below.

Gradual Changes: Gradual rollouts allow delivery teams to release a product to users step by step, with the opportunity to apply updates and to roll back when something goes wrong.
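A gradual rollout with rollback can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a real deployment tool: the function `gradual_rollout`, the percentage steps, and the `is_healthy` callback are all hypothetical names chosen for the example.

```python
from typing import Callable, Iterable


def gradual_rollout(steps: Iterable[int],
                    is_healthy: Callable[[int], bool]) -> int:
    """Expose the new release to a growing share of users.

    Returns the final percentage of users on the new release:
    the last step if every health check passed, or 0 after a rollback.
    """
    exposed = 0
    for percent in steps:
        exposed = percent
        print(f"Routing {percent}% of users to the new release")
        if not is_healthy(percent):
            # Something went wrong: send everyone back to the old release.
            print("Health check failed, rolling back")
            return 0
    return exposed


# Every check passes, so the release reaches all users.
print(gradual_rollout([5, 25, 50, 100], lambda percent: True))

# The check fails at 50%, so the rollout is rolled back.
print(gradual_rollout([5, 25, 50, 100], lambda percent: percent < 50))
```

In practice the health check would look at real signals such as error rates, but the control flow stays the same: widen exposure only while the release looks healthy.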

Constant Communication and Collaboration: This has been the building block of DevOps since its inception. The Operations and Development teams should function cohesively and collaboratively to understand the requirements and expectations of all members of the organization.

Sharing of end-to-end Responsibility: All members of the team should work towards one specific goal and are equally responsible for a project from beginning to end, which includes helping with the needs of other members' tasks.

Ease of Problem-solving: DevOps requires that tasks be performed as early as possible in the project lifecycle. When issues arise, DevOps concentrates effort on addressing them more quickly.

Measuring KPIs (Key Performance Indicators): The decision-making process should be driven by factual information in the first place. It is important to keep track of the progress and activities that make up the DevOps workflow in order to achieve optimum efficiency. Measuring different metrics of the system gives you an understanding of what works well and what else could be done to enhance performance.
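As a small illustration of measuring KPIs, the sketch below computes two metrics from a list of deployment records. The text above does not name specific KPIs, so the choice of deployment frequency and change failure rate is an assumption, and the `Deployment` record and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Deployment:
    day: date
    failed: bool  # True if the deployment caused an incident or a rollback


def deployments_per_week(deployments: List[Deployment], period_days: int) -> float:
    """Average number of deployments per week over the observed period."""
    return len(deployments) / (period_days / 7)


def change_failure_rate(deployments: List[Deployment]) -> float:
    """Share of deployments that failed (0.0 when there were none)."""
    if not deployments:
        return 0.0
    return sum(d.failed for d in deployments) / len(deployments)


# Hypothetical records covering a 28-day period.
history = [
    Deployment(date(2024, 1, 3), failed=False),
    Deployment(date(2024, 1, 10), failed=True),
    Deployment(date(2024, 1, 17), failed=False),
    Deployment(date(2024, 1, 24), failed=False),
]

print(deployments_per_week(history, period_days=28))
print(change_failure_rate(history))
```

Tracking such numbers over time gives the factual basis for decisions that the principle calls for.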

Automation of Processes: The golden rule of DevOps is to automate as many things as possible, such as testing, configuration, deployment procedures, and development tasks. This frees specialists from time-consuming, repetitive work so that they can focus on other essential activities that cannot be automated by nature.

Sharing: The DevOps philosophy highlights the common English phrase "Sharing is Caring". The DevOps culture emphasizes the significance of collaboration, and sharing feedback is a crucial aspect of any work. Sharing is also one of the best ways to spread knowledge and skills across your teams, which promotes transparency, develops collective intelligence, and removes constraints. In addition, with a DevOps process, development need not stop just because the only person who can handle a task efficiently is away for some reason.

The DevOps Online Training at FITA Academy offers a comprehensive view of DevOps concepts such as Continuous Integration, Continuous Deployment, Continuous Testing, and Continuous Monitoring under leading DevOps experts from the industry. By the end of the training program, students should have a clear understanding of the DevOps concepts.

DevOps Process

Plan: This is the part of the development process where you organize the schedules and tasks and set up your project management tools. The primary idea is to plan the tasks using user stories, as in the agile methodology. Writing the tickets as user stories lets operations engineers and developers understand the development requirements and why the work is being done.

Code: In this stage, the developers write the code and review it thoroughly. When the code is ready, it can be merged. In DevOps practice it is also important to share the code between developers and operations engineers.

Build: This is the first step towards automation. The aim here is to build the source code into the desired format, which involves compiling, testing, and deploying it to a specific place in the infrastructure. Once the setup is done, the CI and CD tools can check out the code from source code management and build it.

Test: Continuous testing lets the organization reduce risk. Automated tests help ensure that bugs do not reach the production stage. Testing tools can be built into the workflow to ensure that quality software is produced.

Release: All code has to pass the testing process; only then is it considered ready for deployment.

Deploy: The Operations team deploys the new feature to production. Since automation is one of the major principles of DevOps, this step can be automated by setting up continuous deployment.

Configure/Operate Infrastructure: The Operations team builds and maintains scalable infrastructure, uses infrastructure as code, and handles log management and security control issues.

Monitor: This is one of the most important steps in DevOps, as it allows incidents and issues to be fixed at a faster pace. This in turn gives users a better experience.

Since DevOps aims to improve the satisfaction of your customers, the team naturally repeats these steps over and over with each new feature added to the software or application. This is the major reason why DevOps is considered an endless loop of automation.
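The ordered stages described above can be sketched as a simple pipeline runner. This is an illustrative model only: `run_pipeline` and the stand-in stage functions are hypothetical and do not represent any particular CI/CD tool. Each stage runs in order, and a failing stage stops everything after it, which mirrors the rule that code must pass testing before it is released.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run the stages in order and return the names of those that completed."""
    completed: List[str] = []
    for name, step in stages:
        if not step():
            print(f"{name}: failed, stopping the pipeline")
            break
        print(f"{name}: ok")
        completed.append(name)
    return completed


def always_ok() -> bool:
    """Stand-in for a real stage that succeeds."""
    return True


def failing_tests() -> bool:
    """Stand-in for a test stage that finds a bug."""
    return False


healthy = [("build", always_ok), ("test", always_ok),
           ("release", always_ok), ("deploy", always_ok)]
broken = [("build", always_ok), ("test", failing_tests),
          ("release", always_ok), ("deploy", always_ok)]

print(run_pipeline(healthy))
print(run_pipeline(broken))
```

In the second run nothing after the failed test stage executes, so the faulty build never reaches deployment.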

DevOps Automation

Today, automation plays a key role in DevOps. The question is how you can actually put automation into practice to advance your DevOps goals. In this DevOps Tutorial session, we explain what automation means in the context of DevOps and the different practices that can be automated.

With the never-ending evolution of technology, software development teams are always under pressure to cope with the growing demands and customer expectations placed on business applications. The general expectations are:

- Enhanced Performance

- Extended Functionality

- Guaranteed Uptime and Availability

With the advance of technology, traditional software development processes have also shifted towards cloud-based applications. The present paradigm focuses on developing software as an ongoing service instead of creating it only for specific customer requirements. Software development has come a long way from monolithic to agile structures, in which software can be developed continuously.

Automation

In DevOps, the term automation means removing the need for human engineers to intervene manually in DevOps practices. In principle, DevOps processes such as Continuous Integration (CI), Continuous Delivery (CD), and log analytics could be performed manually. Doing so, however, would need a bigger team, a lot of time, and a high level of coordination and interaction among the team members. With automation, you can perform all these processes using a predefined set of tools and configurations.

What is DevOps Automation?

DevOps Automation is the practice of automating repetitive and mundane DevOps tasks so that they can be executed without human intervention. Automation can be applied across the entire DevOps lifecycle, including:

- Software Deployment & Release

- Design & Development

- Monitoring

The main intent of DevOps Automation is to standardize the DevOps cycle by reducing the manual workload. Automation thus results in several key improvements:

- Enhancing team productivity

- Reducing human errors considerably

- Eliminating the requirement for large teams

- Building a fast-moving DevOps lifecycle

It is important to note that automation in DevOps does not completely remove human intervention from the picture. Even with the best automated DevOps process, you may need human intervention or oversight when there is an update or a bug in the process. Automation only reduces the dependency on humans for the basic or recurring tasks in DevOps practices.

Advantages of Automation in DevOps

Automation offers an array of benefits that help reach the goals of DevOps with ease.

Consistency

Automated processes are highly predictable. A software automation tool always performs the same action until it is reconfigured to do something else. The same cannot be said of a human doing the work.

Scalability

Automation is often considered the mother of scalability. Automated processes can handle far more work than manual ones. To illustrate, when you work manually, you can deploy new releases in reasonable time only if you are dealing with one specific application or environment. When your team handles several applications that are deployed to several environments, such as more than one cloud or OS, automation is what lets you release new code rapidly and consistently.

Speed

Automation makes processes such as code integration and application deployment happen at a rapid pace. Consider two scenarios. First, with automation in place, you do not have to wait for the right person to be available; you can deploy a new release or update regardless of the time of day or the availability of a particular person. Automation tools thus remove this source of delay.

Second, automated processes execute the work itself more rapidly. An engineer has to carry out steps such as checking the environment, typing out the configuration, and manually verifying that the latest version was deployed successfully. In contrast, an automation tool can perform these operations almost instantly.

The Things to be Prioritized for the DevOps Automation

Many processes and practices are found in DevOps, and they differ from one enterprise to another. In this DevOps tutorial, we note some of the common processes that can be prioritized for automation.

Software Testing: Before software is released, it has to undergo testing. Performing this manually takes more time and a larger workforce. You can overcome this obstacle with automated testing tools such as Appium and Selenium. With these tools, software testing becomes much easier and tests can be run on a regular routine.
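To show what an automated test looks like, here is a minimal sketch that uses Python's standard `unittest` module rather than Appium or Selenium, since those tools need a browser or device to drive. The function under test, `apply_discount`, is a hypothetical example and is not taken from any real project.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount (hypothetical example)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


def run_tests() -> bool:
    """Run the test case and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


print("all tests passed:", run_tests())
```

Once tests are written this way, a pipeline can run them on every change without anyone having to repeat the checks by hand.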

CI/CD: Rapid application development and delivery is the central theme of DevOps. It is much more difficult to reach this goal if you do not automate the Continuous Integration (CI) and Continuous Delivery (CD) processes.

Monitoring: One major challenge in a DevOps environment is keeping track of all the components in a rapidly moving environment. Automation tools can check for performance, availability, and security issues and generate alerts so that an issue can be resolved.
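Automated alerting can be sketched as a comparison of measured values against limits. The metric names and limits below are hypothetical, and `check_metrics` is an illustrative helper rather than part of any monitoring product.

```python
from typing import Dict, List


def check_metrics(metrics: Dict[str, float],
                  limits: Dict[str, float]) -> List[str]:
    """Return one alert message per metric that is missing or above its limit."""
    alerts: List[str] = []
    for name, limit in limits.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no data")
        elif value > limit:
            alerts.append(f"{name}: {value} exceeds limit {limit}")
    return alerts


# Hypothetical limits and one sample of measurements.
limits = {"cpu_percent": 90.0, "error_rate": 0.05}
metrics = {"cpu_percent": 97.5, "error_rate": 0.01}

for alert in check_metrics(metrics, limits):
    print(alert)
```

A real setup would run such a check on a schedule and route the alerts to whoever is on call.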

Log Management: A DevOps environment generates a large amount of log data, and it is spread across many components. Gathering and analyzing all of that data by hand is not feasible for many teams. Instead, teams can rely on a log management solution that collects and analyzes the log data reliably.
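The kind of analysis a log management solution automates can be illustrated with a short sketch. It assumes each log line has the form `TIMESTAMP LEVEL message`; that format, the sample lines, and the `count_by_level` helper are assumptions made for the example.

```python
from collections import Counter
from typing import Iterable


def count_by_level(lines: Iterable[str]) -> Counter:
    """Count log lines per severity level, skipping lines that do not parse."""
    counts: Counter = Counter()
    for line in lines:
        parts = line.split(maxsplit=2)  # timestamp, level, rest of the message
        if len(parts) >= 2:
            counts[parts[1]] += 1
    return counts


# Hypothetical log lines collected from several services.
sample = [
    "2024-01-01T10:00:00 INFO service started",
    "2024-01-01T10:00:05 ERROR database timeout",
    "2024-01-01T10:00:09 INFO request served",
    "2024-01-01T10:00:12 ERROR database timeout",
]

summary = count_by_level(sample)
print(summary["INFO"], "info lines,", summary["ERROR"], "error lines")
```

Dedicated tools do the same aggregation across many machines and far larger volumes, which is why doing it by hand does not scale.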

The Popular DevOps Automation tools

Many software options are available for automation. Both open-source and licensed tools support the complete automation of the DevOps pipeline. Among them, the most widely used type is CI/CD tools.

Chef and Puppet are used for cross-platform configuration management. These tools primarily handle deployment, configuration, and the automated management of infrastructure.

TeamCity, Jenkins, and Bamboo are popular CI/CD tools that automate tasks from the beginning of the pipeline through to the deployment stage. Apart from these, there are specialized tools that each focus on a single function covering a crucial aspect of the DevOps pipeline:

Infrastructure Provisioning: Terraform, Vagrant, Ansible

Containerized Applications: Docker, Kubernetes

Source Code Management: CVS, Subversion, Git

Application/Infrastructure Monitoring: QuerySurge, Nagios

Security Monitoring: Splunk, Suricata, Snort

Log Management: Datadog, SolarWinds Log Analyzer, Splunk

It is also possible to combine all these tools to build an all-inclusive, automated DevOps cycle.

Another major trend is to move automation and DevOps tasks to the cloud in order to leverage the power of cloud platforms. The two leading platforms are AWS and Azure. Both offer their users a complete set of DevOps services covering all aspects of the DevOps cycle.

Amazon Web Services: AWS CodeBuild, AWS CodePipeline, AWS CodeStar, and AWS CodeDeploy

Microsoft Azure: Azure Repos, Azure Pipelines, Azure Test Plans, Azure Boards, and Azure Artifacts.

To conclude, automation is not only about replacing human interaction. Rather, automation is a tool that facilitates an efficient workflow in the DevOps cycle. Automation should focus primarily on the processes and tasks that offer the greatest improvement in efficiency or performance. Combined with a good DevOps workflow, automation leads to high-quality software with more frequent releases and increased customer retention. The DevOps Training in Coimbatore at FITA Academy gives students hands-on practice with DevOps concepts and tools.

Git

Here in this DevOps Tutorial, we are going to look in depth at the Git tool, its lifecycle, and its workflow.

Git is a distributed source code management and revision control system with a focus on speed. Linus Torvalds created Git primarily for Linux kernel development. It is free software distributed under the GNU General Public License Version 2 (GPLv2). Git helps developers keep track of their history and their code files by storing the different versions in a repository, which can also be kept on a server such as GitHub. Git offers the performance, security, functionality, and flexibility that most individual developers and development teams require.

Features of Git

Open Source: Git is an open-source tool released under the General Public License (GPL).

Scalable: Git is scalable, which means that it continues to cope easily as the number of users grows.

Security: Git is one of the most secure tools to use. It uses SHA-1 (Secure Hash Algorithm 1) to name and identify the objects within its repository. Every file and commit is checksummed and is retrieved by its checksum at checkout. Also, Git stores its history in such a way that the ID of a specific commit depends on the entire development history leading up to that commit. Once history is published, older versions cannot be changed without the change being noticed.

Speed: Git is very fast. Most Git operations are performed on the local repository, which gives users a large speed advantage over a centralized version control system that must constantly communicate with a server somewhere. According to performance tests conducted by Mozilla, Git was extremely fast compared with other version control systems.

Fetching the version history from a locally stored repository is also much faster than fetching it from a remote server. In addition, the core of Git is written in C, which avoids the runtime overheads associated with higher-level languages. Since Git was developed to work on the Linux kernel, it is capable of managing large repositories efficiently. From speed to performance, Git has outperformed its competitors.

Distributed: One of the important features of Git is that it is distributed. Distributed here means that, rather than checking out only the current state of a project onto other machines, we create a "clone" of the complete repository. Further, instead of having just one central repository to which changes are sent, every user has their own repository that contains the entire commit history of the project. Also, you do not need to connect to the remote repository to make a change. The change is simply stored in the local repository, and whenever needed you can push those changes to the remote repository.

Supports Non-Linear Development: Git supports seamless branching and merging, which helps in visualizing and navigating non-linear development. A branch in Git represents a single commit. The complete branch structure can be constructed by following the parent commits.

Branching and Merging: These are prime features of Git and set it apart from other SCM tools. Git permits the creation of multiple branches that do not affect each other. We can create, merge, and delete branches, and each of these operations takes only a few seconds. Some of the important things that can be achieved using branching are:

- You can create a separate branch for each new module of a project, commit to it, and delete it whenever you want

- You can have a production branch that always contains what goes into production, and it can be merged into a testing branch

- You can also build a demo branch for checking and experimenting. It can be removed whenever required

- A key benefit of branching is that when you push to the remote repository you are not required to push all the branches. You can choose a few of the branches or all of them together.

Staging Area: The staging area is a distinctive feature of Git. It can be thought of as a preview of the next commit: an intermediate area where commits can be reviewed and formatted before they are completed. When you make a commit, Git takes the changes that are in the staging area and records them as a new commit.

We are permitted to add changes to and remove changes from the staging area. The staging area can be treated as the place where Git stores the pending changes. Git does not have a dedicated staging directory in which to store objects that represent file changes. Instead, Git uses a file called the index.

Data Assurance: The Git data model ensures the cryptographic integrity of every unit of the project. It gives every commit a unique commit ID via the SHA algorithm, and you can retrieve and update commits by commit ID. Most centralized version control systems do not offer such integrity by default.
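The way a commit ID depends on everything that came before it can be pictured with a toy hash chain. The sketch below is only an illustration and not Git's real object format: the helper names `toy_commit_id` and `build_history`, and the way the fields are joined before hashing, are assumptions made for this example.

```python
import hashlib


def toy_commit_id(parent_id: str, content: str) -> str:
    """Return a SHA-1 hex digest derived from the parent ID and the content.

    Illustrative only: real Git hashes a structured commit object instead.
    """
    data = f"{parent_id}\n{content}".encode("utf-8")
    return hashlib.sha1(data).hexdigest()


def build_history(contents):
    """Chain toy commits together and return the list of their IDs."""
    ids = []
    parent = ""  # the first commit has no parent
    for content in contents:
        parent = toy_commit_id(parent, content)
        ids.append(parent)
    return ids


original = build_history(["add README", "add sort", "add search"])
tampered = build_history(["add README (edited)", "add sort", "add search"])

# Changing the first commit changes the ID of every later commit as well.
print(original[-1] != tampered[-1])
```

Because each ID is computed from its parent's ID, a silent change to an old commit would show up as a different ID on every commit after it.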

Preserves a Clean History: Git supports rebasing, which is one of its most helpful features. Git Rebase fetches the latest commits from the master branch and places your code on top of them. In this way, Git maintains a neat history for your project.

Benefits of Git Version Control

Here in this DevOps tools tutorial, we have listed some of the important benefits of Git Version Control.

Functions Offline: Git lets its users work both online and offline. With other version control systems such as CVS or SVN, users must have network access to connect to the central repository.

Restores deleted commits: This feature is useful when dealing with important projects and when you are trying out experimental changes.

Undoes mistakes: Git permits you to undo your commands in almost every situation. You can amend the last commit for a small change, and you can revert a complete commit for unnecessary changes.

Offers flexibility: Git supports various non-linear development workflows for both small and large-scale projects.

Security: Git protects against secret alteration of any file and helps maintain an authentic content history of the source files.

Guarantees Performance: Since it is a distributed version control system, Git delivers optimized performance for operations such as merging, branching, committing new changes, and comparing older versions of a source file.

Lifecycle of Git

Here in this DevOps tools tutorial, let us look in depth at the lifecycle of the Git tool.

Local Working Directory: This is the first stage of the Git project lifecycle. The local working directory is where your project resides, and at this point it is not yet tracked.

Initialization: To initialize the repository, use the command git init. This command makes Git aware of the project files in the directory.

Staging area: Now that your source code files, configuration files, and data files can be tracked by Git, you add the files that you want to commit to the staging area by using the git add command. This process is also called indexing. The index comprises the files that have been added to the staging area.

Commit: Finally, you can commit the staged files by using the git commit -m 'our message' command.

Git Workflow

Here in this DevOps tutorial for beginners, you will be introduced to the various workflow options that are available in Git. Once you are familiar with these workflows, you can choose the one that suits your team's size and project, which in turn improves the reliability of your project and your productivity.

Centralized Workflow: In the centralized Git workflow, there is only one development branch, called master, and all changes are committed to this one branch.

Feature Branching Workflow: In the feature branching workflow, the development of each feature takes place only in a dedicated feature branch. The image given below depicts the functioning of the feature branching workflow.

Gitflow Workflow: Rather than a single master branch, the Gitflow Workflow uses two branches. The master branch stores the official release history, and the second 'develop' branch serves as the integration branch for features. The image below explains the Gitflow Workflow:

Forking Workflow: In the forking workflow, each contributor has two Git repositories: one private local repository and one public server-side repository.

DVCS Terminologies

Local Repository: All VCS tools offer a private workplace as a working copy. Developers make changes in their private workplace, and after a commit those changes become part of the repository. Git takes this one step further by giving each developer a private copy of the complete repository. Users can perform many operations with this repository, such as adding, removing, renaming, and moving files, and committing changes.

Index or Working Directory & Staging Area

The working directory is the place where files are checked out. In other CVCSs, developers usually make modifications and commit their changes directly to the repository. Git uses a different strategy. Git does not track each and every modified file. Whenever you perform a commit operation, Git looks only at the files that are present in the staging area.

DevOps Note: Only the files that exist in the staging area are considered for the commit, not every modified file.

Below is an outline of the basic Git workflow:

Step 1 - Modify a file in the working directory.

Step 2 - Add the changed files to the staging area.

Step 3 - Perform a commit operation, which moves the files from the staging area into the repository. After a push operation, the changes are stored permanently in the remote Git repository.

DevOps Notes:

Suppose you have modified two files, "sort.g" and "search.g", and you want a separate commit for each. You can add one of the files to the staging area and perform the commit. Once you are done with that commit, repeat the same process for the other file.
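The relationship between the working directory, the staging area, and a commit can be sketched with a small in-memory model. This is a simplified illustration and not how Git is implemented: the class `ToyRepo` and its methods are hypothetical names invented for this example.

```python
class ToyRepo:
    """A toy model of Git's working directory, index, and commits."""

    def __init__(self):
        self.working = {}   # file name -> content currently being edited
        self.index = {}     # staged snapshot that the next commit will record
        self.commits = []   # list of (message, snapshot) tuples

    def edit(self, name, content):
        # Change a file in the working directory only.
        self.working[name] = content

    def add(self, name):
        # Stage the current working-directory content of one file.
        self.index[name] = self.working[name]

    def commit(self, message):
        # Only what is in the index is recorded; unstaged edits are ignored.
        snapshot = dict(self.index)
        self.commits.append((message, snapshot))
        return snapshot

    def unstaged(self):
        # Files whose working content differs from what is staged.
        return sorted(
            name for name, content in self.working.items()
            if self.index.get(name) != content
        )


repo = ToyRepo()
repo.edit("sort.g", "v1")
repo.edit("search.g", "v1")

repo.add("sort.g")
first = repo.commit("update sort")      # search.g is modified but not staged

repo.add("search.g")
second = repo.commit("update search")

print(sorted(first))    # ['sort.g']
print(sorted(second))   # ['search.g', 'sort.g']
```

The second snapshot lists both files because, in this model, a commit records the whole staged state rather than only the file added most recently.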

Blobs: Blob stands for Binary Large Object. Every version of a file is represented by a blob. A blob holds the file data but does not contain any metadata about the file. It is a binary file, and in the Git database it is named by the SHA-1 hash of that file.

DevOps Notes: In Git, files are not addressed by name. Everything is content-addressed.
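Content addressing can be illustrated by computing a blob ID by hand. The sketch below assumes the SHA-1 object format, in which the hashed data is a short "blob <size>" header, a NUL byte, and then the file content. The function name `git_blob_id` is a hypothetical name chosen for this example.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Compute the SHA-1 ID of a blob that holds `content`."""
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return hashlib.sha1(header + content).hexdigest()


same_a = git_blob_id(b"hello world\n")
same_b = git_blob_id(b"hello world\n")    # e.g. the same text under another name
different = git_blob_id(b"hello world!\n")

print(same_a == same_b)      # True: identical content gives an identical ID
print(same_a == different)   # False: any change in content changes the ID
```

The file name plays no part in the calculation, which is why two files with the same content share one blob.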

Trees: A tree is an object that represents a directory. It holds blobs as well as other sub-directories. A tree is a binary file that stores references to blobs and trees.

Commits: A commit holds the current state of the repository. A commit is also named by its SHA-1 hash. You can think of a commit object as a node of a linked list. Every commit object has a pointer to its parent commit object. From any given commit, you can traverse back through the parent pointers to view the history of that commit. If a commit has multiple parent commits, then that commit was created by merging two branches.
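The linked-list view of commits can be sketched in a few lines. This is a toy model under stated assumptions: the `Commit` class and the `first_parent_history` helper are hypothetical names, and real commit objects carry more data (tree, author, date) than a message.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Commit:
    """A toy commit: a message plus pointers to its parent commits."""
    message: str
    parents: List["Commit"] = field(default_factory=list)


def first_parent_history(commit):
    """Walk back through first parents, newest first, like a linked list."""
    messages = []
    while commit is not None:
        messages.append(commit.message)
        commit = commit.parents[0] if commit.parents else None
    return messages


root = Commit("initial commit")
feature = Commit("add search", [root])
mainline = Commit("add sort", [root])
merge = Commit("merge feature", [mainline, feature])  # two parents: a merge

print(first_parent_history(merge))
print(len(merge.parents) > 1)
```

Following only the first parent gives one line of history. A full history walk would visit every parent of a merge commit.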

Branches: Branches are used to create another line of development. By default, Git has a master branch, which is similar to the trunk in Subversion. Usually, a branch is created to work on a new feature. Once the feature is complete, it is merged back into the master branch and the feature branch can be deleted. Every branch is referenced by HEAD, which points to the latest commit in the branch. Whenever you make a commit, HEAD is updated to the latest commit.

Tags: A tag assigns a meaningful name to a specific version in the repository. Tags are similar to branches, but the fundamental difference is that tags are immutable. In other words, a tag is a branch that nobody intends to modify. Once a tag has been created for a specific commit, it is not updated even when you create a new commit. Developers usually create tags for product releases.

Clone: The clone operation creates an instance of the repository. It not only checks out the working copy but also mirrors the complete repository. Users can perform many operations with this local repository. Networking gets involved only when the repository instances are synchronized.

Pull: The pull operation copies changes from a remote repository instance to the local one. It is used for synchronization between two repository instances. It is similar to the update operation in Subversion.

Push: The push operation copies changes from the local repository instance to the remote one. It is used to store the changes permanently in the Git repository. It is similar to the commit operation in Subversion.

Head: HEAD is a pointer that always points to the latest commit in the branch. Whenever you make a commit, HEAD is updated to the latest commit. The heads of the branches are stored in the .git/refs/heads/ directory.
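The difference between a branch, a tag, and HEAD can be pictured as three kinds of named pointers. The sketch below is a simplified model, not Git's storage format: the class `ToyRefs`, its methods, and the fake commit IDs such as "c1" are all assumptions made for illustration.

```python
class ToyRefs:
    """Toy model of refs: a branch moves on every commit, a tag stays put."""

    def __init__(self):
        self.branches = {"master": None}  # branch name -> latest commit ID
        self.tags = {}                    # tag name -> commit ID
        self.head = "master"              # HEAD names the current branch
        self._counter = 0

    def commit(self):
        # Create a new (fake) commit ID and advance the current branch to it.
        self._counter += 1
        commit_id = f"c{self._counter}"
        self.branches[self.head] = commit_id
        return commit_id

    def tag(self, name):
        # A tag records the commit that the current branch points to right now.
        self.tags[name] = self.branches[self.head]

    def head_commit(self):
        # Resolve HEAD through the current branch to a commit ID.
        return self.branches[self.head]


refs = ToyRefs()
refs.commit()          # creates c1
refs.tag("v1.0")       # the tag now names c1
refs.commit()          # creates c2, the branch moves on

print(refs.head_commit())   # c2
print(refs.tags["v1.0"])    # c1
```

After the second commit the branch, and therefore HEAD, has moved to the new commit, while the tag still names the commit it was created on.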

Revision: A revision represents a version of the source code. Revisions in Git are represented by commits, which are identified by SHA-1 secure hashes.

URL

The URL represents the location of the Git repository. Git stores the URL in its config file.

Jenkins

In this DevOps Tutorial session, we focus in depth on the functions and features of the Jenkins tool. Jenkins is software that lets users perform continuous integration throughout the software/application life cycle.

What is Jenkins?

Jenkins is an open-source automation tool written in the Java programming language that enables continuous integration. Jenkins builds and tests software projects continuously, which makes it simpler for developers to integrate changes into a project and easier for users to obtain a fresh build. It also enables you to deliver software continuously by integrating with a wide variety of testing and deployment technologies.

With the aid of Jenkins, organizations can speed up the software development process through automation. Jenkins integrates development life-cycle processes of all kinds, including build, document, test, package, stage, deploy, static analysis, and many more. Jenkins accomplishes continuous integration with the support of plugins. Plugins permit the integration of the different DevOps stages. If you need to integrate a specific tool, you must install the plugin for that tool, for instance Amazon EC2, HTML Publisher, or Maven 2 Project.

For instance, if an organization is developing a project, Jenkins will continuously build and test the project and show the errors at an early stage of development.

Attributes of Jenkins

Below are some interesting details about Jenkins that make it stand out among Continuous Integration tools.

Adoption: Jenkins is used all across the globe, with over 147,000 active installations and more than 1 million users around the world.

Plugins: Jenkins is well connected, with 1,000+ plugins that let it integrate with most development, testing, and deployment tools. It is clear from the above that Jenkins is in great demand across the globe.

Before diving deep into Jenkins in this DevOps tools tutorial, let us get a clear understanding of what Continuous Integration is.

Continuous Integration is a development practice in which developers are required to commit changes to the source code in a shared repository several times a day. Every commit made to the repository is then built. This permits the team to detect problems at an early stage. Further, depending on the Continuous Integration tool, there are several other functions, such as deploying the built application on a test server and providing the concerned teams with the build and test results.

Continuous Integration with Jenkins

Let us consider a scenario where the complete source code of an application is built and then deployed on a test server for testing. At first glance this may seem to be a perfect way of developing software, but the process has many flaws, which we have listed below.

- The developer team has to wait until the complete software is developed to see the test results.

- There is a high chance that the tests will uncover several bugs. It was hard for developers to locate those bugs because they had to check the entire source code of the application.

- It slows down the complete software delivery process.

- Continuous feedback on things such as coding or architectural issues, build failures, test status, and file release uploads was missing, and this had a heavy impact on the quality of the software.

- The entire process was manual, which increased the risk of frequent failures.

From the above-mentioned points, it is evident that these problems not only slowed down the software delivery process but also affected the quality of the software, which leads to customer dissatisfaction.

To overcome such issues, organizations were in dire need of a system in which developers could frequently trigger a build and test for every change made to the source code. This is where Jenkins came to the rescue of developers. Jenkins is one of the most mature CI tools available in the market.

The flow diagram given below will help you to understand Continuous Integration with Jenkins precisely.

In the above illustration, a developer commits code to the source code repository. Meanwhile, Jenkins checks the repository at regular intervals for changes.

- Soon after a commit takes place, the Jenkins server detects the changes that have occurred in the source code repository.

- Jenkins then pulls those changes and starts preparing a new build.

- If the build fails, the concerned team is notified.

- If the build is successful, the Jenkins server deploys the build to the test server.

- Once testing is done, the Jenkins server generates feedback and notifies the developers of the build and test results.

- Jenkins continues to check the source code repository for further changes, and the complete process keeps repeating.
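The cycle described in the list above can be sketched as a small polling function. This is not Jenkins code or its API, only a plain-Python illustration: `run_ci_cycle` and the `build`, `test`, and `notify` callables are hypothetical stand-ins, and the deployment to the test server is not modelled.

```python
def run_ci_cycle(last_seen, latest_commit, build, test, notify):
    """Run one polling cycle and return the commit ID now considered seen.

    `build` and `test` are callables that return True on success, and
    `notify` receives a status message. All three are stand-ins.
    """
    if latest_commit == last_seen:
        return last_seen                       # nothing new in the repository
    if not build(latest_commit):
        notify(f"build failed for {latest_commit}")
        return latest_commit
    # Build succeeded: this is where the build would go to the test server.
    if test(latest_commit):
        notify(f"build and tests passed for {latest_commit}")
    else:
        notify(f"tests failed for {latest_commit}")
    return latest_commit


messages = []
seen = None
for commit in ["a1", "a1", "b2", "c3"]:         # what each poll finds
    seen = run_ci_cycle(
        seen,
        commit,
        build=lambda c: c != "b2",              # pretend b2 does not build
        test=lambda c: c != "c3",               # pretend c3 fails its tests
        notify=messages.append,
    )

print(messages)
```

The second poll finds the same commit as the first, so nothing is rebuilt. Every other poll ends with exactly one notification.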

Merits of using the Jenkins tool

- Jenkins is an open-source tool and is completely free to use

- You do not incur any additional charges for components or installation set-up

- Jenkins is user-friendly software, which means that you can easily install and configure it

- Jenkins supports 1,000 or more plugins, which eases your workload. If a plugin does not exist, you can write the code for it and share it with the community

- As Jenkins is built using Java, it is highly portable

- Jenkins is a platform-independent tool that runs on all major operating systems, such as Windows, Linux, and OS X

- You have huge community support, as it is open-source software

- Jenkins also supports cloud-based architectures, so you can deploy Jenkins on cloud platforms.

Architecture of Jenkins

Jenkins Single Server: A single Jenkins server was not sufficient to meet certain requirements, such as:

At times you may need several different environments for testing your builds. This cannot be achieved with a single Jenkins server.

When larger and heavier projects are built on a continuous basis, a single Jenkins server cannot handle the complete load efficiently.

To address the above-mentioned requirements, Jenkins brought the distributed architecture into play.

Jenkins Distributed Architecture

Jenkins manages distributed builds using the Master-Slave architecture. The Master and the Slaves communicate using the TCP/IP protocol.

Jenkins Master

Jenkins Master is the main server. The Master's job is to handle the activities below:

- Scheduling the build jobs

- Dispatching the builds to the Slaves for the actual execution

- Monitoring the Slaves

- Recording and presenting the build results

- A Master instance of Jenkins can also execute build jobs directly

Jenkins Slave

- A Slave is a Java executable that runs on a remote machine. Jenkins Slaves have the following characteristics:

- It listens for requests from the Jenkins Master instance

- Slaves can run on a variety of operating systems

- The job of a Slave is to do as it is told, which involves executing the build jobs dispatched by the Master

- You can configure a project to always run on a specific Slave machine, or simply let Jenkins pick the next available Slave

The image given below illustrates this. It shows a Jenkins Master managing three Jenkins Slaves.

Functioning of Jenkins Master and Slave

Consider an example in which we use Jenkins for testing in different environments such as Mac, Windows, and Ubuntu. The diagram given below depicts this.

The above diagram depicts the following:

- Jenkins checks the Git repository at periodic intervals for changes made to the source code

- Each build requires a different testing environment, which is not possible on a single Jenkins server. To perform the testing in different environments, Jenkins uses various Slaves, as shown in the diagram

- The Jenkins Master requests these Slaves to perform the testing and to generate the test reports
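How a Master might hand jobs to Slaves with matching environments can be sketched as a simple label match. This is an illustrative model and not the Jenkins scheduler: the function `dispatch`, the agent names, and the labels are hypothetical. The sketch uses the word "agent" for a Slave, which is the term used in current Jenkins documentation.

```python
from collections import deque


def dispatch(jobs, agents):
    """Assign each job to the next idle agent that carries the job's label.

    `jobs` is a list of (job_name, required_label) pairs and `agents` maps an
    agent name to its label. Returns (assignments, still_queued).
    """
    idle = deque(agents.items())
    assignments = {}
    queued = []
    for job, label in jobs:
        for _ in range(len(idle)):
            name, agent_label = idle.popleft()
            if agent_label == label:
                assignments[job] = name          # this agent is now busy
                break
            idle.append((name, agent_label))     # no match, keep it idle
        else:
            queued.append(job)                   # no idle agent has the label
    return assignments, queued


agents = {"agent-win": "windows", "agent-mac": "mac", "agent-ubuntu": "ubuntu"}
jobs = [
    ("test-ubuntu", "ubuntu"),
    ("test-win", "windows"),
    ("test-ubuntu-2", "ubuntu"),
]
assignments, queued = dispatch(jobs, agents)

print(assignments)
print(queued)
```

The third job stays queued because the only Ubuntu agent is already busy, which mirrors the idea of waiting for the next available Slave.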

Downsides of Jenkins

Jenkins too has its share of demerits. Some of them are:

Developer Centric: Jenkins is feature-driven and developer-centric. A person needs some developer experience to make use of Jenkins.

Setting Change Issues: You may face issues, such as Jenkins not starting up, when you change its settings. Issues can arise when you install plugins as well. Luckily, Jenkins has a wide user base, so you can easily search for a solution online when you face an issue.

Jenkins Applications

Jenkins helps its users to automate and accelerate the software development process. Below are some of the common applications of Jenkins:

Increased Code Coverage: Code coverage is determined by the number of lines of code a component has and how many of them get executed. Jenkins increases code coverage, which ultimately promotes a transparent development process among the team members.
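As a worked example of the definition above, line coverage is the share of a component's lines that were executed. The helper below is a minimal sketch with a hypothetical name, `line_coverage`, and the figures are made up for illustration.

```python
def line_coverage(executed_lines, total_lines):
    """Return line coverage as a percentage of the component's lines."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    if not 0 <= executed_lines <= total_lines:
        raise ValueError("executed_lines must be between 0 and total_lines")
    return 100.0 * executed_lines / total_lines


# A component with 200 lines, of which the tests executed 170.
print(line_coverage(170, 200))   # 85.0
```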

No Broken Code: Jenkins makes sure that the code is good and has been tested well through continuous integration. The code is merged only when all the tests are successful. This ensures that no broken code is shipped into production.

Key Features of Jenkins

Jenkins offers its users many attractive features, and some of them are listed below:

Easy Installation: Jenkins is a self-contained, platform-agnostic, Java-based application that is ready to run on a variety of platforms, including Mac OS, Windows, and Unix-like operating systems.

Easy Configuration: Jenkins is easy to set up and configure through its web interface, which features error checks and built-in help.

Available Plugins: There are hundreds of plugins available in the Update Center, integrating with every tool in the CI and CD toolchain.

Extensible: Jenkins can be extended through its plugin architecture, which offers nearly endless possibilities to its users.

Ease of Distribution: Jenkins can easily distribute work across multiple machines for faster builds, tests, and deployments across multiple platforms.

Free Open Source: Jenkins is an open-source resource backed by large community support.

Ansible

Here in this DevOps tools tutorial, we are going to look at the Ansible tool. This DevOps Tutorial for Beginners provides a fair understanding of the Ansible tool and its role in the DevOps process. Before going in depth, let us first see what Ansible is all about.

What is Ansible?

Ansible is an open-source IT automation engine that automates application deployment, provisioning, orchestration, configuration management, and many other IT processes. By using Ansible you can install software, automate day-to-day tasks, provision infrastructure, patch systems, improve security and compliance, and share automation across your organization.

Ansible is easy to deploy because it does not need any agents or custom security infrastructure.

Ansible uses playbooks to describe automation jobs, and playbooks use a simple language called YAML, which is easy for humans to read, write, and understand.

Ansible is designed for multi-tier deployment. Ansible does not manage just one system at a time. It models your IT infrastructure by describing how all your systems interrelate. Ansible is agentless, which means that it works by connecting to your nodes over SSH (by default). If you want another method of connection, such as Kerberos, Ansible gives you that option.

Advantages of using Ansible

Agentless: Ansible does not require any agent to be installed on the remote systems it manages. This means significantly less maintenance overhead and fewer performance issues. Ansible uses a push-based approach that leverages existing SSH connections to run tasks on the remote managed hosts. Chef and Puppet, by contrast, work by installing an agent on each host to be managed, and the agent pulls changes from the control host over its own channel.

Ansible is built using Python: Ansible is written in Python, which means that installing and running Ansible on any Linux distribution is easy. As Python is a popular programming language, there is a good chance that you are already familiar with it, and there is a large community to help you out at any time during the DevOps process.

Deploys the Infrastructure in Record Time: Ansible can send tasks to many remote managed hosts concurrently. This means that you can execute an Ansible task on a second managed host without waiting for it to complete on the first, which reduces provisioning time and lets you deploy infrastructure faster than ever before.

Ansible is easier to understand: A key highlight of Ansible is its gentle learning curve. Beginners can comprehend the Ansible tool with little effort. Troubleshooting in Ansible is also simple, and the chances of making an error are small.

Ansible Workflow

Ansible works by connecting to your nodes and pushing out small programs, called Ansible modules, to them. Ansible executes these modules and removes them when they have finished. The library of modules can reside on any machine, and no daemons, databases, or servers are needed.

From the above image, it is clear that the Management Node is the controlling node that handles the complete execution of a playbook. The inventory file provides the list of hosts on which the Ansible modules have to be run. The Management Node makes an SSH connection, executes the small modules on the host machine, and thus installs the software.

Ansible removes the modules once they have been run properly. In other words, it connects to the host machine, executes the instructions, and, once the installation is successful, removes the code that was copied to the host machine for execution.
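The push, execute, and remove cycle can be imitated on a single machine. The sketch below is not Ansible code: a local temporary directory stands in for the remote host, no SSH is involved, and `MODULE_SOURCE` and `run_module_once` are hypothetical names used only for illustration.

```python
import json
import shutil
import subprocess
import sys
import tempfile
from pathlib import Path

# A tiny stand-in "module": it reports its result as JSON on standard output.
MODULE_SOURCE = 'import json\nprint(json.dumps({"changed": False, "msg": "pong"}))\n'


def run_module_once(module_source):
    """Copy a module into a temporary directory, run it, then remove it."""
    workdir = Path(tempfile.mkdtemp(prefix="toy-module-"))
    try:
        module_path = workdir / "module.py"
        module_path.write_text(module_source, encoding="utf-8")   # "push"
        completed = subprocess.run(                               # execute
            [sys.executable, str(module_path)],
            capture_output=True,
            text=True,
            check=True,
        )
        return json.loads(completed.stdout), workdir
    finally:
        shutil.rmtree(workdir, ignore_errors=True)                # clean up


result, workdir = run_module_once(MODULE_SOURCE)
print(result["msg"])       # pong
print(workdir.exists())    # False: nothing is left behind after the run
```

Nothing stays installed on the target after the run, which is the property that lets Ansible work without a resident agent.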

Important Terminologies of Ansible

Task: A task is a section that consists of a single procedure to be accomplished.

Module: A module is a command or a set of similar Ansible commands that are meant to be executed on the client side.

Ansible Server: This is the machine where Ansible is installed and from which all the playbooks and tasks are run.

Role: A role is a way of organizing tasks and related files so that they can later be called in a playbook.

Inventory: This is a file that contains data about the Ansible client servers.

Fact: Facts are details gathered from the client system into global variables by the gather-facts operation.

Handler: A handler is a task that is called only if a notifier is present.

Play: A play is the execution of a playbook.

Tag: A tag is a name set on a task, which can be used later to run just that specific task or group of tasks.

Notifier: A notifier is the section attributed to a task that calls a handler if the output is changed.
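The relationship between tasks, notifiers, and handlers can be sketched in plain Python. This is a simplified model rather than Ansible's implementation: the function `run_play`, the task tuples, and the handler name "restart web" are assumptions made for this example. The sketch runs each notified handler once, after all tasks have finished.

```python
def run_play(tasks, handlers):
    """Run tasks in order, then run each notified handler once.

    `tasks` is a list of (name, action, notify) tuples, where `action()`
    returns True if it changed something and `notify` is a handler name or
    None. `handlers` maps a handler name to a callable. Returns a log.
    """
    log = []
    notified = set()
    for name, action, notify in tasks:
        changed = action()
        log.append(f"task: {name} ({'changed' if changed else 'ok'})")
        if changed and notify:
            notified.add(notify)            # remember, but do not run yet
    for handler_name, handler in handlers.items():
        if handler_name in notified:
            handler()
            log.append(f"handler: {handler_name}")
    return log


restarts = []
log = run_play(
    tasks=[
        ("write config", lambda: True, "restart web"),
        ("write vhost", lambda: True, "restart web"),
        ("ensure package", lambda: False, "restart web"),
    ],
    handlers={"restart web": lambda: restarts.append("web")},
)

print(log)
print(len(restarts))   # 1: two notifications still mean a single restart
```

A task that reports no change does not notify its handler, so a play in which nothing changes triggers no handlers at all.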

Ansible Architecture

Ansible is an adaptable, lightweight IT automation engine for automating application deployment, intra-service orchestration, cloud provisioning, configuration management, and other IT tasks. Because it was designed for multi-tier deployments right from its inception, Ansible models your IT infrastructure by describing how all your systems interrelate instead of managing a single system at a time. Ansible uses no agents and no additional custom security infrastructure.

It is easy to deploy and, most importantly, it uses a very simple language, YAML, in the form of Ansible Playbooks. This lets you describe your automation jobs in a way that approaches plain English.

Here in this DevOps tutorial, we will give you a quick outline of how Ansible works and how it ties the rest of the pieces together.

Modules

Ansible works by connecting to your nodes and pushing out small scripts, called Ansible modules, to them. Most modules accept parameters that describe the desired state of the system. Ansible then executes these modules and removes them when they have finished. Your library of modules can reside on any machine, and no servers or databases are required.

You can write your own modules in Ansible; however, you should first consider whether you really need to. You can work with your favorite text editor and terminal program, and you can use a version control system (VCS) to keep track of the changes made to your content. You may write specialized modules in any programming language that can return JSON (Python, Ruby, Bash, etc.).
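To illustrate the idea of a module that takes a desired state as its parameters and reports the result as JSON, here is a minimal, self-contained sketch. It deliberately does not use Ansible's own module helpers. The function ensure_line and the demo file name are hypothetical, and the "changed" key is modelled on the common convention of reporting whether anything had to be modified.

```python
import json
import os
import tempfile


def ensure_line(path, line):
    """Make sure `line` is present in the file at `path`.

    Returns a result dictionary; "changed" is True only when the file
    had to be modified, so calling it twice is harmless (idempotent).
    """
    existing = []
    if os.path.exists(path):
        with open(path) as handle:
            existing = handle.read().splitlines()
    if line in existing:
        return {"changed": False, "path": path}
    existing.append(line)
    with open(path, "w") as handle:
        handle.write("\n".join(existing) + "\n")
    return {"changed": True, "path": path}


if __name__ == "__main__":
    demo_path = os.path.join(tempfile.mkdtemp(), "demo.conf")
    # The first call adds the line; the second finds it already present.
    print(json.dumps(ensure_line(demo_path, "max_connections=100")))
    print(json.dumps(ensure_line(demo_path, "max_connections=100")))
```

The design point is that the caller describes the end state ("this line is present") rather than the steps, which is what makes a second run safe.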

Module Utilities

When multiple modules use the same code, Ansible stores those functions as module utilities to reduce duplication and ease maintenance. For instance, the code which parses the URLs is lib/ansible/module_utils/url.py. Similarly, you can write your own module utilities. DevOps Notes: Module utilities are written in Python or PowerShell.

Plugins

Plugins augment Ansible's core functionality. While modules are executed on the target system in separate processes, plugins are executed on the control node within the /usr/bin/ansible process. Plugins provide options and extensions for the core features of Ansible, such as connecting to inventory, transforming data, and logging output. Ansible ships with many handy plugins, and you can easily write your own. For instance, you can write an inventory plugin that connects to any data source that returns JSON. Plugins are written in Python.

Inventory

By default, Ansible represents the machines it manages in a file that puts every managed machine into groups of your own choosing.

To add new machines, no additional SSL signing server is involved, so there is never any hassle in working out why a particular machine did not get linked up because of obscure DNS or NTP issues. If there is another source of truth in your infrastructure, Ansible can connect to that as well. Ansible can draw inventory, group, and variable information from sources such as OpenStack, Rackspace, EC2, and more.

For instance, this is how a plain-text inventory file looks:
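As an illustration of the format, the sketch below holds a small INI-style inventory in a Python string and groups the hosts by section. The host names, the group names, and the parse_inventory helper are made up for this example, and the parser handles only plain group headers and host lines, not the full inventory syntax.

```python
SAMPLE_INVENTORY = """\
mail.example.com

[webservers]
web1.example.com
web2.example.com

[dbservers]
db1.example.com ansible_host=192.0.2.10
"""


def parse_inventory(text):
    """Group host names by [section]; hosts before any section are 'ungrouped'."""
    groups = {"ungrouped": []}
    current = "ungrouped"
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            groups.setdefault(current, [])
        else:
            # The first token is the host; key=value pairs after it are
            # inline host variables, which this sketch ignores.
            groups[current].append(line.split()[0])
    return groups


inventory = parse_inventory(SAMPLE_INVENTORY)
```

Here each bracketed header starts a group, and every following line names one host in that group.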

Once the inventory hosts are listed, variables can be assigned to them in simple text files (in a subdirectory called ‘group_vars/’ or ‘host_vars/’) or directly in the inventory file.

Also, as stated earlier, you can use a dynamic inventory to pull your inventory from data sources such as OpenStack, EC2, and Rackspace.
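A dynamic inventory source is commonly a script that prints JSON when it is called with --list. The sketch below shows that general shape with hard-coded data standing in for a real cloud API. The function fetch_hosts, the host names, and the variables are hypothetical; a real script would query EC2, OpenStack, or a similar source instead.

```python
import json
import sys


def fetch_hosts():
    """Stand-in for a call to a cloud API (hypothetical data)."""
    return [
        {"name": "web1.example.com", "group": "webservers", "vars": {"http_port": 80}},
        {"name": "db1.example.com", "group": "dbservers", "vars": {}},
    ]


def build_inventory(hosts):
    """Build one entry per group plus the per-host variables."""
    inventory = {"_meta": {"hostvars": {}}}
    for host in hosts:
        group = inventory.setdefault(host["group"], {"hosts": []})
        group["hosts"].append(host["name"])
        inventory["_meta"]["hostvars"][host["name"]] = host["vars"]
    return inventory


def main(argv):
    inventory = build_inventory(fetch_hosts())
    if len(argv) == 3 and argv[1] == "--host":
        # Variables for a single host.
        return json.dumps(inventory["_meta"]["hostvars"].get(argv[2], {}))
    # Default (and --list): the whole inventory.
    return json.dumps(inventory, indent=2)


if __name__ == "__main__":
    print(main(sys.argv))
```

Because the groups are rebuilt on every call, hosts that appear or disappear in the data source are picked up without editing any file by hand.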

PlayBooks

Playbooks can finely orchestrate different slices of your infrastructure topology, with detailed control over how many machines are tackled at a time. The Ansible approach to orchestration is known for its finely tuned simplicity. The idea is that your automation code should still make clear sense to its users years down the line, and that there should be very little special syntax to remember.

Networking

Ansible is used for automating different networks, and it uses the same simple, powerful, secure, agentless automation framework that serves IT development and operations. It makes use of a data model that is separate from the Ansible automation engine, so it easily spans different network hardware.

Hosts

In the Ansible architecture, hosts are the node systems that are automated using Ansible. They can be machines running Linux (Red Hat, for example) or Windows.

APIs

The Ansible APIs act as the bridge to public and private cloud services.

CMDB

The CMDB (Configuration Management Database) is a repository that acts as the data warehouse for IT installations.

Cloud

The cloud is a network of remote servers on which you can store, process, and manage data. These servers are hosted on the internet, so the data is stored remotely instead of on a local server. Ansible uses the cloud to launch instances and resources and to connect to the servers, so you need a sound knowledge of operating all your tasks remotely.

Ansible in DevOps

As you know, in DevOps the functions of the Development and Operations teams are integrated. This integration is a major factor in modern test-driven application design. Ansible supports it by providing a stable environment for both Operations and Development, which results in continuous orchestration.

Now, let us see how Ansible handles the complete DevOps infrastructure. As soon as developers think of infrastructure as a component of their application, which is nothing but Infrastructure as Code (IaC), performance and stability become normative. IaC is the method of provisioning and managing the compute infrastructure (virtual servers, bare-metal servers, and processes) and its configuration via machine-processable definition files, instead of using interactive configuration tools or physical hardware configuration. It is here that Ansible occupies a prominent position in the DevOps process.

In the DevOps process, sysadmins work in sync with the developers, so development velocity improves significantly. More time is spent on activities such as experimenting, performance tuning, and getting the work done. Ansible also takes less time when compared to its competitors. The image given below shows how Ansible simplifies the tasks of sysadmins and other users.

Hence, these are the major reasons behind the growing popularity of Ansible in the DevOps process.

Docker

Containerization is a technology that has been in the industry for a long time. However, with the advent of Docker, it has seen tremendous growth. Now let us see why containerization is being used so widely.

Containers give organizations a logical packaging mechanism in which applications can be abstracted from the environment in which they run. This decoupling allows container-based applications to be deployed seamlessly irrespective of the environment, whether it is a public cloud, a private data center, or an individual's laptop. Containers give developers the power to create predictable environments that are isolated from the rest of the applications and can run anywhere. From an operations standpoint, besides portability, containers also provide granular control over resources, which makes the infrastructure more efficient and results in better utilization of your compute resources. Owing to these benefits, the adoption of containers is widespread in the industry, and Docker is one of the most widely adopted tools.
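The granular control over resources mentioned above can be illustrated with the docker run command line. The sketch below only assembles the argument list and does not start anything. The image name, the container name, and the limits are example values, and build_run_command is a hypothetical helper.

```python
import shlex


def build_run_command(image, name, memory="256m", cpus="0.5", ports=None):
    """Assemble a `docker run` command with memory and CPU limits."""
    command = ["docker", "run", "--detach", "--name", name,
               "--memory", memory, "--cpus", cpus]
    # Map each host port to a container port.
    for host_port, container_port in (ports or {}).items():
        command += ["--publish", f"{host_port}:{container_port}"]
    command.append(image)
    return command


command = build_run_command("nginx:latest", "web", ports={8080: 80})
print(shlex.join(command))
```

On a machine where Docker is installed, the resulting list could be handed to subprocess.run to start the container with those limits applied.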

Here in this DevOps Tutorial session, we are going to look at Docker, the popular containerization tool in the industry.

The popularity of Docker has grown tremendously over the past few years, and it has changed the traditional model of software development. Docker containers allow users to scale up easily, and the tool itself is user-friendly. In this DevOps tutorial for beginners, we will help you understand Docker containers, their needs, benefits, and environment in depth.

What is Docker?

Docker is an OS-level virtualization software platform that enables its users to easily create, deploy, and run applications in Docker containers. A Docker container is a comparatively lightweight package that allows developers to pack up an application together with its libraries and dependencies and deploy it as a single unit. Docker greatly accelerates and simplifies the workflow. It permits developers to select a project-specific deployment environment for each project, with its own set of application stacks and tools.

Docker is simple to use and saves a great deal of time. It can be easily integrated with an existing environment. Hence, it offers the portability and flexibility to run an application in different locations, be it on-premise or in a public or private cloud service. Docker offers a sound solution for the creation and deployment of applications. This Docker tutorial guides you in getting a comprehensive understanding of Docker and its important components.

Application of Docker

Docker is a tool designed for both system administrators and developers. It is used in multiple stages of the DevOps life cycle for the robust deployment of applications. It permits developers to build an application and package it with the necessary dependencies in a Docker container, which can then run in any environment.

Docker permits you to develop an application and its supporting components using containers. These containers are lightweight and run directly on the host machine's kernel. Further, Docker permits running many containers on a single host. It offers an isolated environment in which it is safe to run different containers at the same time on a given host. Below we have listed the places where the Docker tool comes in handy for its users.

Standardized Environment: Docker permits developers to work in a standardized environment, which streamlines the development lifecycle and reduces the disparity among different environments. Docker is well suited to Continuous Integration and Continuous Delivery workflows because it makes the development environment repeatable. Hence, it ensures that all the members of the team work in the same environment and can easily follow the changes that take place at each stage.

Disaster Recovery: An unanticipated situation can freeze the software development cycle, and this could affect the progress of the business severely. With Docker, this risk can be reduced. Docker makes it easy to duplicate a Docker image or file to new hardware and to restore it from there when it is needed. If a specific version or feature has to be rolled back, Docker makes it quick to revert to an earlier version of the Docker image.

It permits deploying the software without having to worry about accidental events. It serves as a good backup against configuration and hardware failures when the workflow is disrupted, and it helps in resuming the work quickly.

Consistent and Robust Delivery of Applications: Docker permits applications to be developed, tested, and deployed at a faster pace. The SDLC is generally a long one since it includes testing, identifying bugs, making the required changes, and deploying them to see the final results. Docker permits developers to identify bugs at the initial stage of the development process, so they can be rectified immediately in the development stage before the application moves on to the testing and validation stage. Hence, with Docker, updates are faster and easier to deploy: they are simply pushed into the production environment.

Code Management: Docker offers a highly portable environment in which you can easily run different Docker containers. Docker keeps the testing and production environments consistent with the aid of code management, and it offers a steady environment for both code development and deployment. It also helps in streamlining DevOps through a standardized configuration interface that is available to all the members of the team. Docker containers have made the development process more scalable and user-friendly, and they ensure that the interface is standardized for every team member.
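The backup and rollback workflow described under Disaster Recovery can be pictured with the docker save, docker load, and docker tag commands. The sketch below only builds the command lists and does not run them. The image name, the tags, and the archive path are example values, and the helper names are hypothetical.

```python
def backup_commands(image, archive):
    """Commands to export an image to a tar archive and load it elsewhere."""
    return [
        ["docker", "save", "--output", archive, image],  # on the old host
        ["docker", "load", "--input", archive],          # on the new host
    ]


def rollback_commands(repository, good_tag, live_tag="latest"):
    """Re-point the live tag at a known-good earlier version of the image."""
    return [["docker", "tag", f"{repository}:{good_tag}", f"{repository}:{live_tag}"]]


backup = backup_commands("shop/api:1.4", "/backups/shop-api-1.4.tar")
rollback = rollback_commands("shop/api", "1.3")
```

Keeping every released version under its own tag is what makes the rollback a single re-tagging step rather than a rebuild.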

Docker Container vs Virtual Machines